From 3 days to 5 minutes: How Docker Compose saves my client €150,000 a year

Management Summary

In modern software development, setting up development, test and acceptance environments costs an average of 2-3 days per team and release cycle. For a 7-person development team, this adds up to over €150,000 per year - pure waiting time, not added value.

With a well thought-out Docker Compose setup, this process can be reduced to less than 5 minutes. This article uses a real SaaS project to show how this works - technically sound, but pragmatically feasible.

Key Takeaways:

- ROI: 99% time saving during environment setup

- Infrastructure as code: Consistency from Dev to Production

- Field-tested setup for complex multi-service architectures

- Security hardening without complexity overhead

The Monday when nothing worked

It's 9:00 a.m., Monday morning. The development team is ready, sprint planning is complete, the user stories have been prioritized. Then the announcement: "We have to set up the new test environment before we can get started."

Anyone who works in software development knows what follows:

The first developer installs Postgres - version 16, because that is the latest version. The second sets up Redis - with different configuration parameters than in production. The third struggles with MinIO because the bucket names are not correct. And the DevOps engineer is desperately trying to get Traefik up and running while everyone else waits.

On Tuesday lunchtime, everything is finally up and running. Almost. The versions do not match, the configuration differs from production, and the next update starts all over again.

The bill is brutal:

- 7 developers × 16 hours × €80/hour = €8,960 for ONE environment

- × 3 environments (dev, test, acceptance) = €26,880

- × 6 release cycles per year = €161,280

And these are just the direct costs. Not included: Frustration, context switches, delayed releases.

The solution: Infrastructure as code - but the right way

"Why don't you just use Docker?" - A legitimate question, but it falls short. Docker alone does not solve the problem. Kubernetes? Overkill for most projects and often more complex than the original problem.

The answer lies in the middle: Docker Compose as "Infrastructure as Code Light".

With a well-structured Docker Compose setup, three days of setup hell becomes a single command:

git clone project-repo

docker compose --profile dev up -d

5 minutes later: All services are running, consistently configured, with the correct versions.

Sounds too good to be true? Let's take a look at what it looks like in practice.

The stack: a real SaaS example

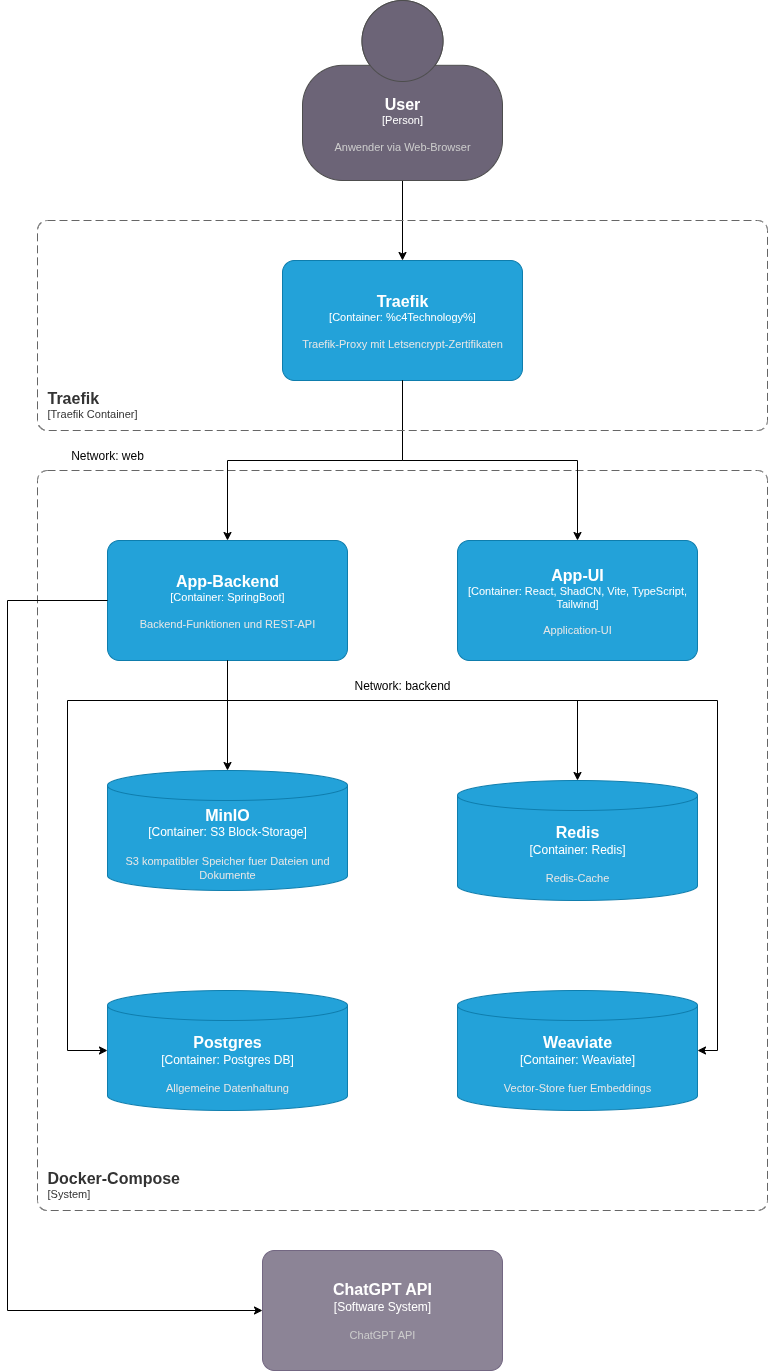

Our example comes from a real project - a modern SaaS application with typical microservice architecture:

Front-end tier:

- Traefik as a reverse proxy and SSL termination with Let's Encrypt

- React UI with Vite, TypeScript, ShadCN and TailwindCSS

Backend tier:

- Spring Boot Microservice as API layer

- PostgreSQL for relational data

- Weaviate Vector-DB for embeddings and semantic search

- Redis for caching and session management

- MinIO as S3-compatible object storage

- OpenAI API integration for AI-supported features

Eight services, three databases, AI integration, complex dependencies. Exactly the type of setup that normally takes days.

This is what it looks like in practice:

Illustration: All services run in Docker Compose with strict network separation between the web and backend layers.

The entire setup starts with a single command: docker compose up -d

The secret: profiles and environment separation

The key lies in the intelligent use of Docker Compose Profiles. We distinguish between two modes:

Dev profile: Only backend services run in the container

- Frontend runs locally (hot reload, fast development)

- Databases and external services containerized

- Ports exposed for direct access with GUI tools

Prod profile: Full stack

- All services containerized

- Traefik routing with SSL

- Network isolation between web and backend

- Security hardening active

A simple export COMPOSE_PROFILES=dev decides which services start. No separate compose file, no duplication, no inconsistencies.
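As a rough illustration of how such a profile split can look inside one compose file, here is a minimal sketch. The service names, image names and tags are assumptions made for this example, not the project's actual file.

```yaml
# Sketch only: service names, images and tags are illustrative assumptions.
services:
  postgres:
    image: postgres:17
    profiles: ["dev", "prod"]    # backend services start in both modes
    environment:
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}

  redis:
    image: redis:7
    profiles: ["dev", "prod"]

  ui:
    image: registry.example.com/cv-ui:1.0.0   # hypothetical image
    profiles: ["prod"]           # in dev, the frontend runs locally instead

  traefik:
    image: traefik:v3.0
    profiles: ["prod"]           # routing and SSL only in the full stack
```

With COMPOSE_PROFILES=dev only postgres and redis would start in this sketch; with COMPOSE_PROFILES=prod all four services would.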

The underestimated game changers

Beyond the obvious advantages, there are three aspects that make the biggest difference in practice:

1. The "clean slate" at the touch of a button

Everyone knows the problem: the development environment has been running for weeks. Tests have been run, data has been changed manually, debugging sessions have left their mark. Suddenly the system behaves differently than expected - but is it the code or the "dirty" data?

With Docker Compose:

docker compose down -v

docker compose up -d

30 seconds later: A completely fresh environment. No legacy issues, no artifacts, no "but it works for me". That's the difference between "let's give it a try" and "we know it works".

2. Automatic database setup with Flyway

The database does not start empty. Flyway migrations run automatically at startup:

- Schema changes are applied

- Seed data for tests is loaded

- Versioning of the DB structure is part of the code

A new developer not only receives a running DB, but a DB in exactly the right state - including all migrations that have taken place in recent months.
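The article does not say whether Flyway runs inside the Spring Boot service or as its own container. The following sketch assumes the second variant: a one-shot Flyway container that migrates the database as soon as Postgres reports healthy. Database name, user, paths and image tags are assumptions for illustration.

```yaml
# Sketch only: assumes Flyway runs as its own one-shot container.
# Names, paths and tags are illustrative assumptions.
services:
  postgres:
    image: postgres:17
    environment:
      POSTGRES_DB: app
      POSTGRES_USER: app
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U app -d app"]
      interval: 5s
      timeout: 3s
      retries: 10

  flyway:
    image: flyway/flyway:10
    command: migrate
    environment:
      FLYWAY_URL: jdbc:postgresql://postgres:5432/app
      FLYWAY_USER: app
      FLYWAY_PASSWORD: ${POSTGRES_PASSWORD}
    volumes:
      - ./db/migration:/flyway/sql:ro   # versioned SQL files, e.g. V1__init.sql
    depends_on:
      postgres:
        condition: service_healthy      # migrate only once the DB accepts connections
```

If the migrations run inside Spring Boot instead, the flyway service disappears and only the health check on postgres remains relevant.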

3. Reproduce production errors - in 5 minutes

"The error only occurs with customers who are still using version 2.3." - Every developer's nightmare.

With Docker Compose:

export CV_APP_TAG=2.3.0

export POSTGRES_TAG=16

docker compose up -d

The exact production environment runs locally. Same versions, same configuration, same database migrations. The error is reproducible, debuggable, fixable.

No VM, no complex setup, no week of preparation. The support call comes in, 5 minutes later the developer is debugging in the customer's exact setup.
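For the two exported variables to have any effect, the compose file has to reference them in its image tags. A minimal sketch of that wiring, assuming a hypothetical registry path and a default Postgres version:

```yaml
# Sketch only: the registry path and the default value are illustrative assumptions.
services:
  cv-app:
    # Fails fast with a clear message if no application version was chosen
    image: registry.example.com/cv-app:${CV_APP_TAG:?set CV_APP_TAG to a released version}

  postgres:
    # Falls back to major version 17 when POSTGRES_TAG is not set
    image: postgres:${POSTGRES_TAG:-17}
```

Requiring CV_APP_TAG rather than defaulting to latest is a design choice in this sketch; it keeps an accidental unpinned start from happening.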

Traefik: More than just a reverse proxy

A detail that is often overlooked: Traefik runs in a separate container and is not part of the service stack definition. This has a decisive advantage.

In small to medium-sized production environments, this one Traefik container can serve as a load balancer for several applications:

# One Traefik for all projects

traefik-container → cv-app-stack
                  → crm-app-stack
                  → analytics-app-stack

Instead of configuring and maintaining three separate load balancers (or three nginx instances), a single Traefik manages all routing rules. SSL certificates? Let's Encrypt takes care of it automatically - for all domains.

The setup scales elegantly: from one project on one server to several stacks - without having to change the architecture.
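One common way to attach an application stack to a standalone Traefik is a shared external network plus routing labels on the service. The sketch below shows this pattern. The network name, hostname, entry point name and certificate resolver name are assumptions and must match whatever the Traefik container itself is configured with.

```yaml
# Sketch only: network name, hostname, entry point and resolver name are assumptions.
services:
  cv-app:
    image: registry.example.com/cv-app:2.3.0   # hypothetical image
    networks:
      - web
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.cv-app.rule=Host(`cv.example.com`)"
      - "traefik.http.routers.cv-app.entrypoints=websecure"
      - "traefik.http.routers.cv-app.tls.certresolver=letsencrypt"
      - "traefik.http.services.cv-app.loadbalancer.server.port=8080"

networks:
  web:
    external: true      # created once, shared with the standalone Traefik container
    name: traefik-web
```

A second stack (for example the CRM) would join the same external network and bring its own router labels, without touching the Traefik container.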

Security: Pragmatic instead of dogmatic

"But aren't open database ports a security risk?" - Absolutely justified question.

The answer lies in the context: dev and test are not production.

In development and test environments:

- Ports are deliberately exposed for debugging

- Developers use GUI tools (DBeaver, RedisInsight, MinIO Console)

- The environments run in isolated, non-public networks

- Trade-off: developer productivity vs. theoretical risk

In production:

- Access only via VPN or bastion host

- Strict firewall rules

- Monitoring and alerting

- The same compose file, different environment variables

Here, the mistake is often made of securing dev environments like Fort Knox, while developers then struggle with SSH tunnels and port forwarding. The result: wasted time and frustrated teams.

The trick is to find the right trade-off.

Security hardening: where it counts

Where we make no compromises: Container security.

Every service runs with:

- Read-only file system: Prevents manipulation at runtime

- Non-root user (UID 1001): Minimum privileges

- Dropped capabilities (cap_drop: ALL): only what is really needed is added back

- No new privileges: Prevents privilege escalation

- tmpfs for temporary data: Limited, volatile memory

cv-app:
  user: "1001:1001"
  read_only: true
  tmpfs:
    - /tmp:rw,noexec,nosuid,nodev,size=100m
  security_opt:
    - no-new-privileges:true
  cap_drop:
    - ALL

This is not overhead - it is a standard configuration that is set up once and then applies to all environments.

Network Isolation: Defense in Depth

Two separate Docker networks create clear boundaries:

Web-Network: Internet-facing

- Traefik Proxy

- UI service

- API gateway

Backend-Network: Internal only

- Databases (Postgres, Redis, Weaviate)

- Object Storage (MinIO)

- Internal services

A compromised container in the web network has no direct access to the databases. Defense in Depth - implemented in practice, not just on slides.
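A minimal sketch of such a two-network layout, with service and image names chosen purely for illustration:

```yaml
# Sketch only: service and image names are illustrative assumptions.
services:
  ui:
    image: registry.example.com/cv-ui:1.0.0
    networks: [web]

  cv-app:
    image: registry.example.com/cv-app:2.3.0
    networks: [web, backend]     # the only bridge between the two layers

  postgres:
    image: postgres:17
    networks: [backend]

networks:
  web: {}
  backend:
    internal: true               # no external connectivity for this network
```

Here the ui container cannot resolve or reach postgres at all; only cv-app sits on both networks. Note that internal: true also affects published ports for containers attached only to that network, so a dev variant with exposed database ports would presumably leave this flag off.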

Environment variables: The underestimated problem

An often overlooked aspect: How do you manage configuration across different environments?

The bad solution: Passwords in the Git repository

The complicated solution: Vault, secrets manager, complex toolchains

The pragmatic solution: Stage-specific .env files, outside of Git

.env.dev # For local development

.env.test # For test environment

.env.staging # For acceptance

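.env.prod # For production (stored in encrypted form)

A simple docker compose --env-file .env.dev up -d - ready. (A plain source .env.dev before docker compose up also works, but only if the variables are exported, for example with set -a.)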

For production: Secrets injected via CI/CD pipeline or stored encrypted with SOPS/Age. But not every environment needs enterprise-grade secrets management.

The workflow in practice

New developer in the team:

git clone project-repo

cp .env.example .env.dev

# Enter API keys

docker compose --env-file .env.dev --profile dev up -d

4 steps, 5 minutes - the developer is productive.

Deployment in Test:

ssh test-server

git pull

docker compose --profile prod pull

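docker compose --profile prod up -d --force-recreate

Zero-downtime deployment? Health checks in the compose file are the first building block:

healthcheck:
  test: ["CMD-SHELL", "curl -f http://localhost:8080/actuator/health || exit 1"]
  interval: 30s
  timeout: 10s
  retries: 3
  start_period: 60s

Docker marks a service as healthy only once this check passes, so dependent services (depends_on with condition: service_healthy) and docker compose up --wait can wait for it. Note that Compose recreates each container in place, so a brief interruption per service remains; real zero-downtime requires a second instance behind the proxy.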
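A health check pays off most when other services wait on it. The following sketch shows the start-order pattern with depends_on. Service names, images and the Postgres check are assumptions for illustration.

```yaml
# Sketch only: service names and images are illustrative assumptions.
services:
  postgres:
    image: postgres:17
    environment:
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 10s
      timeout: 5s
      retries: 5

  cv-app:
    image: registry.example.com/cv-app:2.3.0
    depends_on:
      postgres:
        condition: service_healthy   # start the API only after the DB check passes
```

Without the condition, depends_on only orders container start, not readiness, which is where the "mysterious errors" at startup usually come from.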

Lessons learned: What I would do differently

After several projects with this setup - some insights:

1. Invest time in the initial compose file. The first 2-3 days of setup will pay off a hundredfold over the course of the project. Every hour here saves days later.

2. Health checks are not optional. Without health checks, you may start services before the DB is ready. This leads to mysterious errors and debugging sessions.

3. Version pinning is your friend. postgres:latest is convenient - until an update breaks everything. postgres:17 creates reproducibility.

4. Document your trade-offs. Why are ports open? Why this approach instead of that? You (and your team) will thank you in the future.

5. One compose file, multiple stages. The attempt to maintain separate compose files for each environment ends in chaos. One file, controlled via env variables and profiles.

The ROI: convincing figures

Back to our Monday morning scenario. With Docker Compose:

Before:

- Setup time: 16-24 hours per developer

- Inconsistencies between environments: frequent

- Debugging of environmental problems: several hours per week

- Costs per year: €160,000+

After:

- Setup time: 5 minutes

- Inconsistencies: virtually eliminated

- Debugging of environmental problems: rare

- Costs for initial setup: approx. 16 hours = €1,280

- Savings in the first year: €161,280 minus €1,280 = €160,000

And that doesn't even take into account the soft factors: Less frustration, faster onboarding time, more time for features instead of infrastructure.

Who is it suitable for?

Docker Compose is not a silver bullet. It is not a good fit for:

- Highly scaled microservice architectures (100+ services)

- Multi-region deployments with complex routing

- Environments that require automatic scaling

It is perfect for:

- Start-ups and scale-ups up to 50 developers

- SaaS products with 5-20 services

- Agencies with several client projects

- Any team that wastes time with environment setup

The scaling path: from Compose to Kubernetes

An often underestimated advantage: Docker Compose is not a dead-end investment. If your project grows and needs real Kubernetes, migration is surprisingly easy.

Why? Because the container structure is already in place:

- Images are built and tested

- Environment variables are documented

- Network topology is defined

- Health checks are implemented

Tools such as Kompose automatically convert Compose files to Kubernetes manifests. The migration is not a rewrite, but a lift-and-shift.

The recommendation: Start with Compose. If you really need Kubernetes (not because it sounds cool at conferences, but because you have real problems), you have a solid basis for the migration. Until then: Keep it simple.

The first step

The question is not whether Docker Compose is the right tool. The question is: How much time is your team currently wasting on environment setup?

Do the math:

- Number of developers × hours for setup × hourly rate × number of setups per year

If the number is in four figures, the investment is worthwhile. If it's in five figures, you should have started yesterday.

About the author: Andre Jahn supports companies in optimizing their development processes and pragmatically implementing Infrastructure as Code. With over 20 years of experience in software development and DevOps, the focus is on solutions that actually work - not just on slides.

Digital sovereignty

Digital sovereignty is a hot topic in the media at the moment.

In fact, I am currently working on this professionally.

I'm always very much in favor of being as close to the user as possible - that's why I followed the "eat your own dog food" principle and became "digitally independent".

Instead of the Mac, I now use Ubuntu - I've had several attempts over the decades - and this time I've actually switched. The Mac stays, of course, because it runs programs that don't run on Ubuntu.

Result: Everything is ok. I mostly work with web applications anyway, so the switch is hardly noticeable.

Instead of Dropbox, I use Nextcloud, self-hosted.

Result: No difference, the files are synchronized in the same way as with Dropbox, Google & Co.

I now use Proton Mail instead of Gmail. The emails were easy to import and the Proton web interface is ok. However, I use the calendar from Nextcloud.

Result: Everything is running, no problems.

Instead of the GitLab service, I now use Gitea, self-hosted.

Result: No negative experiences so far. I still have to take care of build automation.

I now use Mattermost instead of Slack. For the things I did with Slack, it works very well. The choice between Rocket.Chat and Mattermost wasn't easy for me.

Instead of DigitalOcean, I now use a server from Hetzner. It's actually much cheaper and so far there have been no problems.

Office packages: I never really liked them. But if I have to, I use LibreOffice - the suffering is very comparable.

Conclusion: It works. The effort wasn't that great and productivity doesn't suffer.

Suddenly Product Owner

You are a new product owner for a product that you understand in principle - but don't really know yet.

The customer expects - and rightly so - immediate operational readiness. What to do?

To deliver cool new features to users, you first have to do one thing: experience the product from the user's perspective.

So: product installed, played around a bit. Discovered new terms and processes. Then created test data and imported it into the system via REST API - using Mockaroo, because I still had a subscription from the last project.

The API is not always easy to use - frustrating, but instructive, especially because it deals with very complex data.

I discover dependencies that are not visible in the UI. Many users also work directly with the API - that is valuable context.

At the same time: The UI helps you to understand. The imported data has to appear somewhere - so you click through, search and learn.

This type of familiarization creates a steep learning curve. Mistakes that I make are probably also made by many users. This is exactly where understanding arises - and the first ideas for real improvements.

Instructional videos? Only enough for the basics. If you really want to _understand_ a system, you have to do it yourself.

Online communities and support sites also provide valuable insights - real problems, real solutions.

And when you think you have found a problem? Then the next step is not to change it immediately. Perhaps the solution makes sense. Therefore: talk - with developers, architects, users. Understand how the system really "ticks".

Only those who experience the product themselves can develop it further in a meaningful way.

And one more thing: Be enthusiastic about your product - and treat users like good friends whom you really want to help.

Understand their goals, not just their desires. Because as Henry Ford said:

"If I had asked people what they wanted, they would have said: faster horses."

Automated development environments: Faster, safer and more cost-effective success

Automated development environments: The key to greater efficiency, security and scalability

In modern companies, the pressure to provide software more quickly is growing - and that with falling costs and strict security requirements. But how can development environments be designed in such a way that they are flexible, secure and efficient?

🔹 Faster onboarding processes: Developers are up and running in minutes, not days.

🔹 Cost efficiency: resources are only used when they are actually needed.

🔹 Maximum security: source code and data remain in the protected corporate environment.

🔹 Higher productivity: standardized tech stacks and automated pipelines allow developers to focus on the essentials.

I've had my own experiences with remote development tools like JetBrains Gateway and GitHub Codespaces - and the results are promising! Read my new article to find out how automated development environments can transform your business.

Automated development environments: Greater efficiency, better security and lower costs in corporate environments

In many companies, there is increasing pressure to deliver software faster while reducing costs and complying with strict security requirements. Automated deployment of development environments is a promising approach to achieving these goals. This article shows how standardized development platforms and cloud-based resources can not only reduce costs, but also increase security and productivity in the development team.

1. Why automated development environments?

Efficiency and speed

Fast provisioning: Cloud instances for developers can be set up and switched off again in just a few minutes. Companies only pay for as long as the instances are actually used.

More productive developers: As all the necessary tools and accesses are configured in advance, tedious manual setups are a thing of the past. New team members can start working almost immediately.

Cost optimization

Resources on-demand: Instead of purchasing highly equipped laptops for all developers, CPU and memory resources in the cloud can be scaled as required.

Project-specific billing: Tagging and usage statistics make costs per team member and project visible down to the minute. This allows budgets to be planned and monitored in a targeted manner.

Improved security

Centrally managed access: Access data for source code repositories, databases and other resources are managed automatically and can be blocked immediately if necessary.

Confidential data remains within the company: Source code and production-related data never leave the secure company network. The risk of data leaks due to local copies on developer laptops is significantly reduced.

2. Challenges and solutions

Standardized technology stack

To ensure smooth collaboration, a central administrator or a DevOps team should determine which technologies and versions (e.g. Java, Spring Boot, Angular, databases) are used. This avoids "uncontrolled growth" and promotes a homogeneous development landscape.

Automated provisioning

Centralized management: A self-service portal makes it possible to quickly provide new developer environments - including all the necessary tools (IDE, database access, etc.).

Integration with CI/CD: Standardized build pipelines and deployment scripts reduce waiting times. Instead of each project team maintaining its own scripts, everyone benefits from centralized best practices.

Performance and user-friendliness

Low latency: For developers to be able to work as quickly in a cloud-based IDE as locally, a good choice of data center location and optimized remote protocols are crucial.

Customization options: Despite standardized configurations, certain customizations (e.g. additional libraries, test suites) should be possible in order to meet project-specific requirements.

Maintenance and scaling

Dynamic resource adjustment: Depending on the project phase (development, testing, maintenance), the required performance can be increased or reduced.

Regular updates: As operating systems and tools are maintained centrally, security patches or version upgrades are rolled out quickly for all developers.

3. Concrete process in the corporate context

1. Creating the project configuration: An administrator (or DevOps team) defines which technologies (e.g. Spring Boot, Angular, Kafka, MongoDB) are to be used and which security guidelines apply.

2. Allocation of resources: Based on the requirements, it is determined how much CPU, memory and storage is available per developer. The costs incurred are allocated to the respective project.

3. Automatic setup: Within a few minutes, a dedicated development instance is provided for the new employee, including IDE and interfaces to Git, databases and other services. Access and credentials are managed centrally and provided to the developer automatically.

4. Ongoing operation: The system recognizes when resources are unused and can shut them down automatically to reduce costs. Developers work as usual in their IDE and do not have to worry about infrastructure details.

5. Leaving the project: If a developer changes projects or leaves the company, access to code, databases and repositories is blocked centrally. This maintains control over all project resources - a crucial factor, especially in corporate environments.

4. Advantages for the company

Cost transparency: minute-by-minute billing allows decision-makers to see where and how much resources are actually being used.

Security and compliance: source code and data remain in a protected environment. Access rights can be withdrawn immediately if required.

Rapid scalability: New employees or entire teams can be integrated into projects within a very short time.

Greater efficiency: Instead of setting up each development environment manually, everyone involved benefits from central standards and best practices.

Own experiences

The market now offers various solutions for remote development, including GitHub Codespaces, JetBrains Space and JetBrains Gateway. I have personally been experimenting with JetBrains Gateway for frontend and backend development for some time now. The experience has been consistently positive:

Almost local feeling: IntelliJ and WebStorm run remotely, but behave almost like on the local computer.

Terminal access: Work in the terminal takes place directly on the remote system and is pleasantly fast.

This type of development is particularly suitable for security or corporate environments in which local source code copies are problematic.

5. Conclusion and outlook

Automated development environments in the corporate environment offer an ideal opportunity to realize projects faster and more efficiently. Decision-makers benefit from:

Clear cost control

Strict security standards

Accelerated time-to-market

Thanks to predefined processes and cloud-supported resources, the onboarding of new developers is simplified and the risk of inconsistent setups is reduced. In the future, self-service platforms are likely to become increasingly important for providing development environments at the touch of a button without having to go through manual approvals or lengthy setup processes. Topics such as Infrastructure as Code and GitOps will also continue to drive forward the seamless integration of development and operations.

Recommendation: Companies that invest in an automated development platform at an early stage will gain decisive competitive advantages - through faster software releases, increased IT security and satisfied development teams.

Business analysis: the underestimated key to project success

Delays occur in many IT projects because interfaces have not been sufficiently analyzed. It is often assumed that existing documentation is correct and that all relevant information is known. However, it is often only late in the development process that it becomes apparent that key details are missing or incorrect, particularly in the case of interfaces.

Why do these problems occur?

There are many reasons for faulty interface analysis:

Documentation is often outdated or incomplete.

Specialist departments or external partners do not always have a complete understanding of all the technical details.

Developers assume that the information provided is correct until they encounter problems during implementation.

Changes in the architecture or requirements are not consistently followed up.

This means that interfaces are only tested during the development process. If problems then occur, the effort required to correct them is enormous. Either developers have to adapt the interfaces themselves afterwards or the project team returns to the business analysis to clarify missing or incorrect information. This results in delays, additional costs and an unnecessary burden for the entire team.

How can these problems be avoided?

In order to reduce these errors, a thorough examination of the interfaces should already be carried out during the business analysis. The following measures have proven effective:

1. Test interfaces at an early stage

Instead of relying solely on documentation, interfaces should be tested directly during the analysis phase. Tools such as Postman, which can be used to easily validate REST interfaces, are ideal for this. An early check uncovers inconsistencies and ensures that errors are avoided later on.

2. Use automatic documentation

Manual documentation is prone to errors and often not up to date. A sensible alternative is the use of OpenAPI or similar standards for automatic documentation. This offers several advantages:

The documentation always remains up-to-date as it is generated directly from the actual interface definitions.

Business analysts can specify new interfaces in OpenAPI format, creating a clear basis for developers.

Developers can generate code for the REST API directly from the OpenAPI documentation, which speeds up the development process and reduces sources of error.
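To make this concrete, here is a minimal sketch of what a business analyst might hand over in OpenAPI format. The API, its path and its fields are entirely hypothetical. The point is only to show how little is needed for an interface definition that tools can validate and generate code from.

```yaml
# Sketch only: the API, path and fields are hypothetical.
openapi: 3.0.3
info:
  title: Customer API
  version: 1.0.0
paths:
  /customers/{customerId}:
    get:
      summary: Fetch a single customer
      parameters:
        - name: customerId
          in: path
          required: true
          schema:
            type: string
      responses:
        "200":
          description: The customer was found
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/Customer"
        "404":
          description: No customer with this ID exists
components:
  schemas:
    Customer:
      type: object
      required: [id, name]
      properties:
        id:
          type: string
        name:
          type: string
```

A file like this can be imported into tools such as Postman for early testing, so the specification and the validation step described above work from the same source.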

3. Establish a hands-on mentality in business analysis

Business analysis should not only consist of collecting and documenting requirements, but should also include active validation. This means:

Think through interfaces and processes not only on paper, but try them out directly.

Early coordination with developers to clarify technical feasibility.

Use of modern tools to simulate and check APIs before the actual development begins.

Conclusion

A precise business analysis lays the foundation for the success of an IT project. Errors in the analysis often lead to problems in development that can only be corrected later with considerable effort. Early interface validation and automated documentation can minimize the risk considerably.

Companies that rely on practical and tool-supported business analysis benefit from shorter development times, fewer errors and a more efficient project process.

Have you already had experience with interface problems in projects? How do you deal with the validation of APIs in business analysis? I look forward to the exchange!